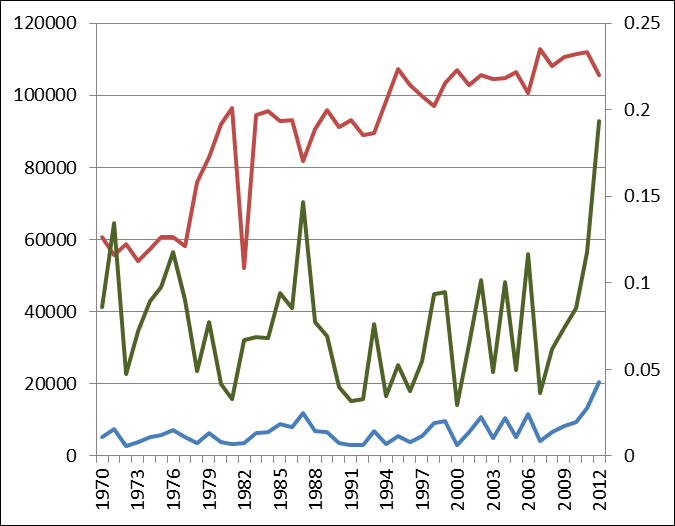

In terms of NFL averages, completion percentage is way up, interception rate is way down, pass attempts are way up, and the passing game has never been more valuable. We all know that. But sometimes, when everyone is zigging, a lone team might be better off zagging.

The question here is whether that theory applies to building an offense around a power running game. Defenses are looking for lighter, faster defensive ends and linebackers who can excel in pass coverage; just about every defense takes linebackers off the field in favor of defensive backs more often than it did a decade ago. Defenses also spend the majority of their practice reps on stopping the pass. As defenses become faster, quicker, and lighter (and better against the pass), should a team respond by developing a power running game?

On one hand, it’s tempting to say that model could obviously work: just look at the Seahawks and Cowboys. Seattle does have a dominant running game; what the Seahawks did to the Giants last year is not safe for work. But Seattle also has Russell Wilson, perhaps the most valuable player in the league when you combine production, position, and salary, along with the best defense in the NFL. So yes, the Seahawks are successful with a power running game, but that’s not really a model other teams can follow. And for all its success, Seattle doesn’t even have a very good offensive line, which would seem to be the top priority for a team trying to build a power running attack.

The team with the best offensive line in the NFL is probably Dallas. But the Cowboys also have Tony Romo and Dez Bryant, so again, that’s not really a model other teams can copy.

I’m thinking about some of the teams in the middle class of the AFC (the Bills, the Jets, the Browns, the Texans), teams that are currently trying the all-defense, no-quarterback approach. Finding a quarterback is the most difficult thing to do in the NFL, and these four teams can attest to that. By trading for LeSean McCoy, Buffalo appears to be trying what this article proposes, but there are two problems with that plan. One, the Bills have one of the worst offensive lines in the NFL, and two, McCoy is not necessarily the right guy to build a move-the-chains style of offense around.

The Jets have invested a ton of resources in their offensive line, hitting on two first-round draft picks in 2006 with Nick Mangold and D’Brickashaw Ferguson and spending to acquire mid-level free agents from Seattle (James Carpenter this year after Breno Giacomini last offseason). But the Jets’ offensive line is far from dominant, and the team isn’t really building around a power running game: its top two tight ends are below-average blockers, and the Jets are investing more in wide receivers than in running backs.

Houston is an interesting case, because the Texans led the NFL in rushing attempts last year. The Texans do have a very good run-blocking offensive line and Arian Foster, but that still doesn’t feel like enough. Houston’s efficiency numbers were hurt by giving carries to Alfred Blue (the Texans were 8-5 when Foster was active), but the team also doesn’t have much in the way of run blockers at tight end or fullback.